‘The greatest shortcoming of the human race is our inability to understand the exponential function.’

—Albert A. Bartlett

I’ve written about the many risks in AI investing, but my long-term outlook has always been optimistic.

This often confuses readers, so I want to clarify my view today.

You see, our daily experience trains us to expect gradual change, not the kind of curve AI is starting to climb.

There’s an ancient Indian legend about a king and a chessboard that perfectly captures our blind spot.

The story goes that a grateful king offered the inventor of chess any reward.

The inventor asked for something seemingly modest. One grain of rice on the first square, two on the second, four on the third — doubling each square until all 64 were filled.

The king laughed. Such a humble request.

But his treasurers weren’t laughing when they did the maths. The 64th square alone would require more than nine quintillion grains. That’s more rice than we’ve produced in all of history.

In some tellings, the inventor was executed. In others, he became the king’s chief advisor.

Either way, the monarch learned a harsh lesson about underestimating exponential growth.
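For readers who want to check the treasurers' maths, here is a minimal sketch. It assumes only the rule from the legend (one grain on the first square, doubling on each square after that); the function names are illustrative.

```python
# Grains of rice on a chessboard when each square doubles the one before it.
SQUARES = 64


def grains_on_square(n: int) -> int:
    """Grains on square n (1-indexed): square n holds 2**(n - 1) grains."""
    if not 1 <= n <= SQUARES:
        raise ValueError("square must be between 1 and 64")
    return 2 ** (n - 1)


def total_grains(up_to: int = SQUARES) -> int:
    """Total grains on squares 1..up_to (a geometric series: 2**up_to - 1)."""
    return sum(grains_on_square(n) for n in range(1, up_to + 1))


if __name__ == "__main__":
    print(f"Square 64 alone:           {grains_on_square(64):,}")
    print(f"Whole board:               {total_grains():,}")
    print(f"First half (squares 1-32): {total_grains(32):,}")
```

The 64th square holds 2^63 grains, a little over nine quintillion, and the whole board holds just under twice that. The first 32 squares together hold only about 4.3 billion grains, which is why the request sounds so modest at the start.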

The Upside of the Curve

Before we go further, let’s think about the prize at stake.

If AI continues improving at current rates, we may be looking at one of the most significant economic shifts in human history.

Goldman Sachs estimates AI could eventually boost global GDP by 7%. McKinsey sees the technology adding around US$13 trillion in economic value by 2030.

But beyond those headline figures, the potential future is genuinely extraordinary.

AI that could handle all daily routine work. Systems that accelerate scientific discovery, extend lifespans, and free humans for more creative and meaningful pursuits.

This isn’t just science fiction. It could be the logical endpoint of trends already in motion.

But looking around, it can be difficult to actually see those trends.

Technologists often describe the way new technologies develop as an S-curve: slow progress, sudden acceleration, then a plateau.

Source: Waitbutwhy.com

AI appears to be entering that middle phase. But why do we struggle to see it?

Wired for Linear

Our brains evolved on the African savannah. The threats and opportunities we faced moved at running speed.

If a predator was 100 metres away and closing at 2 metres per second, our ancestors had 50 seconds to react. That’s linear. That’s intuitive.

But exponential processes don’t work that way.

Something that doubles roughly every year can seem slow at first… until suddenly everything has changed.
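A rough way to see why doubling feels slow at first is to compare how much of the final value each kind of growth has reached along the way. The sketch below assumes a hypothetical 10-year horizon and a quantity that doubles once a year; both are illustrative choices, not figures from any dataset.

```python
YEARS = 10  # hypothetical horizon, chosen only for illustration


def doubling_share(year: int, horizon: int = YEARS) -> float:
    """Fraction of its final value a yearly-doubling process has reached by `year`."""
    return 2 ** year / 2 ** horizon


def linear_share(year: int, horizon: int = YEARS) -> float:
    """Fraction of its final value a steady, linear process has reached by `year`."""
    return year / horizon


if __name__ == "__main__":
    print("year  linear  doubling")
    for year in range(YEARS + 1):
        print(f"{year:>4}  {linear_share(year):>6.1%}  {doubling_share(year):>8.1%}")
```

Halfway through, the linear process is at 50% of its final value, while the doubling process is at 1/32, or about 3%. Half of all the growth arrives in the final year.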

This is why folks who track AI progress seem increasingly alarmed while mainstream commentators remain sceptical.

They’re looking at different parts of the same curve.

Below is a 2013 visualisation to give you an idea of what exponential increases can look like.

A doubling of computing power every 18 months may seem innocuous at first, but…

Source: Mother Jones (2013)

This is an excellent example for two reasons. First, it’s easy to see how quickly things can explode.

And second, those 2013 predictions of our top computing power today were off by 525%.

The fastest computer today, El Capitan, performs over six times as many operations per second as their top estimates.

It’s a reminder that every time Moore’s Law has been declared dead, engineers promptly doubled performance again. And now AI is riding even steeper ‘scaling laws’.

Soon, that lake could become a flood.

Data Points Up… Up and Away

The change that’s coming will catch us by surprise — me included.

This may seem baffling to readers who have heard me claim that ‘AI isn’t going to take your job tomorrow.’ That’s still the case.

However, at the same time, AI could be commanding entire sectors by the mid-2030s.

I really mean that.

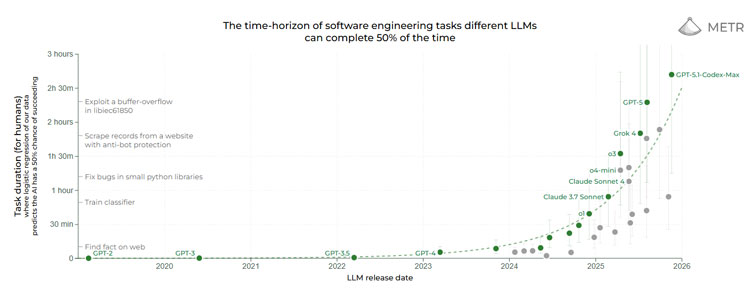

The research organisation METR has been measuring how long AI models can work autonomously on software engineering tasks.

Their findings show the length of tasks AI can complete has been doubling approximately every seven months for the past six years.

Here’s what that looks like on a linear scale:

Source: METR


If this trend continues, it has the potential to change the world beyond what our linear minds can comprehend… Of course, there’s always a possibility that things could slow.

AI scaling is an arcane mix of physics, raw industry, and data, any of which could become a bottleneck. But let’s stick to the bull case.

Current frontier models can now complete, in mere minutes, software tasks that would take professionals two and a half hours.

Pushing this trend out, we’d expect AI agents to independently complete tasks that take humans days or weeks within the next few years.
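The extrapolation itself is simple compounding. The sketch below is a back-of-the-envelope version, not METR's method: it takes the 2.5-hour task length and seven-month doubling time cited above, and assumes an 8-hour working day for converting hours into days. The function names and that conversion are assumptions for illustration, and results shift noticeably with the baseline date and the hours-per-day figure you choose.

```python
import math

DOUBLING_MONTHS = 7     # doubling time cited from METR above
BASELINE_HOURS = 2.5    # task length cited for current frontier models
HOURS_PER_WORKDAY = 8   # assumption: not stated in the article


def task_horizon_hours(months_ahead: float,
                       baseline_hours: float = BASELINE_HOURS,
                       doubling_months: float = DOUBLING_MONTHS) -> float:
    """Projected task length in human hours, `months_ahead` months from the baseline."""
    return baseline_hours * 2 ** (months_ahead / doubling_months)


def months_until(target_hours: float,
                 baseline_hours: float = BASELINE_HOURS,
                 doubling_months: float = DOUBLING_MONTHS) -> float:
    """Months of continued doubling needed to reach `target_hours`."""
    return doubling_months * math.log2(target_hours / baseline_hours)


if __name__ == "__main__":
    for months in (0, 12, 28, 56):
        hours = task_horizon_hours(months)
        days = hours / HOURS_PER_WORKDAY
        print(f"+{months:>2} months: {hours:8.1f} hours (~{days:.1f} working days)")
```

Under these assumptions, four doublings (28 months) turn a 2.5-hour task into a 40-hour one, or five 8-hour working days. Every further seven months doubles it again, provided the trend holds.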

By late 2028, they could complete tasks that would take us 5 days…

But by 2031, we’re talking 85 days’ worth of work.

Let that sink in. We’re not measuring marginal improvements anymore. At that point, AI would be capable of projects equal to a business quarter’s worth of our work.

The difference between AI that can reliably handle two-hour tasks and AI that can handle multi-week tasks isn’t just more capacity. It’s a fundamentally different economic reality.

But this is when the scale tips into a whole new paradigm.

By 2033, we could have an AI capable of producing work equivalent to 32,800 hours of human effort.

That’s nearly 4 years of human effort condensed into mere hours for AI.

By mid-2035, AI could complete 59 YEARS of independent work in a handful of days.

At that point, AI would be capable of multi-decade development arcs and a level of compressed thinking that leaves us behind.

And that reality is just nine years away.

If news came that advanced aliens from a distant galaxy were arriving in nine years, you can imagine the world’s reaction.

And yet, here we are, laughing like the king in the story.

The Second Half of the Chessboard

Futurist Ray Kurzweil coined the phrase ‘the second half of the chessboard’ to describe when exponential growth becomes impossible to ignore.

We may be entering the second half soon.

Now, I’m not here to tell you AI will definitely transform everything by 2028.

Predictions are hard, especially about the future.

But what I am suggesting is that our intuitions are the wrong tool for evaluating what’s coming.

Exponentials can appear to be doing little, right up until the moment they’ve changed the world.

The king thought a few grains of rice was a trivial request. He was wrong.

Don’t make the same mistake with AI.

Regards,

Charlie Ormond,

Small-Cap Systems and Altucher’s Investment Network Australia

The post The Problem with Exponentials appeared first on Fat Tail Daily.
